At Fullstory, we index and serve hundreds of terabytes of data to power search, analytics, and data visualizations. To upgrade database versions, migrate schemas, swap out compute instances, or make other changes that require hard cutovers, we rely on a multi-step blue-green migration of the underlying data, all while continuing to serve high-fidelity results in near real time. We run several large Solr clusters as our primary database, but many of the principles discussed here should apply to other sharded databases.

Use Cases

Fullstory needs fast and safe migration tooling to perform infrastructure upgrades. Custom tooling is necessary due to the difficulty of upgrading stateful services, especially those with large and rapidly evolving datasets, like ours. Since building the end-to-end database migration process for our Solr cluster, we have run full migrations multiple times to provide a better infrastructure and ultimately a better product.

Version Upgrades

We have executed two major version upgrades of Solr under this migration process. We moved our entire dataset from Solr 6 to Solr 7 and, not long after, from Solr 7 to Solr 8. Version upgrades allow us to pick up the latest features, improvements, and fixes.

Multiple Clusters

We have split our data across multiple Solr clusters by moving a subset of customers to new clusters. Multiple clusters allow Fullstory to accommodate growth while improving performance.

Hardware Upgrades

We have upgraded our hardware to the new N2D family VM instances built on AMD processors through Google Cloud. Modernizing our hardware improves performance.

Migration Process

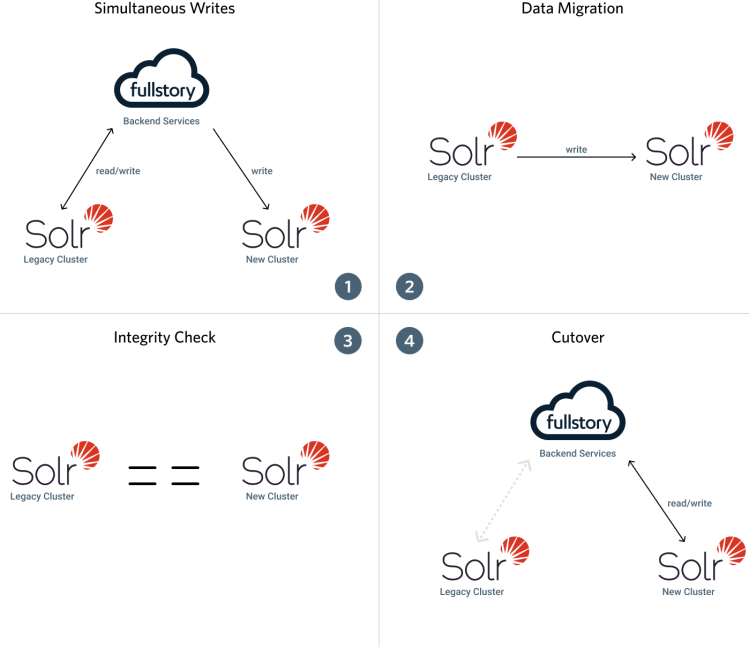

At a high level, the migration process looks like a standard blue-green deployment.

Migration process visualized by step.

1. Issue all writes to both the legacy and new Solr cluster.

All newly indexed data is written to both clusters. Live flows that perform computations or alter indexing based on the previous indexed state must account for both clusters independently.

2. Run migration to move all historical data from the legacy Solr cluster to the new Solr cluster.

All historical indexed data is copied over to the new cluster, never overwriting data that has already been inserted or updated through the live flows. This is the hard part! It takes quite a bit of work to move the massive amount of data that Fullstory indexes for our customers.

The Historical Data Migration section below expands on this step in detail.

3. Verify integrity of the new Solr cluster.

All indexed data in the new cluster is integrity checked against the source of truth data in the legacy cluster. Integrity checking must handle timing differences between writing to the legacy and new clusters in addition to the timing differences of the Solr commit intervals.

4. Cut over reads to the new Solr cluster.

All live flows use the new Solr cluster as the source of truth for search and visualizations. At this point, the legacy Solr cluster can be destroyed.
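Steps 2 and 3 above can be illustrated with a minimal sketch. This is not our production code: the two clusters are modeled as in-memory dictionaries, and the names `Doc`, `backfill`, `find_mismatches`, and `grace_seconds` are hypothetical. It shows only the two rules described in the steps: the backfill never overwrites a document that the live flows already wrote, and the integrity check skips documents touched too recently to have settled on both clusters.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Doc:
    """Hypothetical document shape used only for this sketch."""
    value: str
    updated_at: float  # seconds since epoch of the last write


Cluster = Dict[str, Doc]  # stand-in for a cluster: doc id -> document


def backfill(legacy: Cluster, new: Cluster) -> int:
    """Copy historical docs into `new`, skipping ids the live flows already wrote."""
    copied = 0
    for doc_id, doc in legacy.items():
        if doc_id not in new:  # insert-if-absent: never overwrite live data
            new[doc_id] = doc
            copied += 1
    return copied


def find_mismatches(legacy: Cluster, new: Cluster,
                    now: float, grace_seconds: float) -> List[str]:
    """Return ids whose docs differ, ignoring docs written within the grace window."""
    mismatched = []
    for doc_id, legacy_doc in legacy.items():
        new_doc = new.get(doc_id)
        latest = legacy_doc.updated_at
        if new_doc is not None:
            latest = max(latest, new_doc.updated_at)
        if now - latest < grace_seconds:
            continue  # too recent: a dual write or commit may still be in flight
        if new_doc != legacy_doc:
            mismatched.append(doc_id)
    return sorted(mismatched)
```

In this sketch the grace window stands in for both sources of timing skew mentioned in step 3: the delay between the two writes and the commit interval on each cluster.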

While each of these steps could be discussed at length, the most interesting piece of the infrastructure is the historical data migration. It contains the most moving pieces, requires high parallelization and coordination, and ultimately is the main driver of a successful migration. The technical details of this piece are explored next.

Historical Data Migration

With hundreds of terabytes of structured data unevenly distributed across customers, we have to run specially designed processes to move data between clusters efficiently and within a reasonable migration timeline. Our process relies on three core activities: parallelization, coordination, and time slicing.

Parallelization

A single process migrating our historical indexed data would take years to complete. For obvious reasons, this is not acceptable. One of the main design goals of the historical data migration process is to be parallelizable, providing the ability to scale up and down as needed.

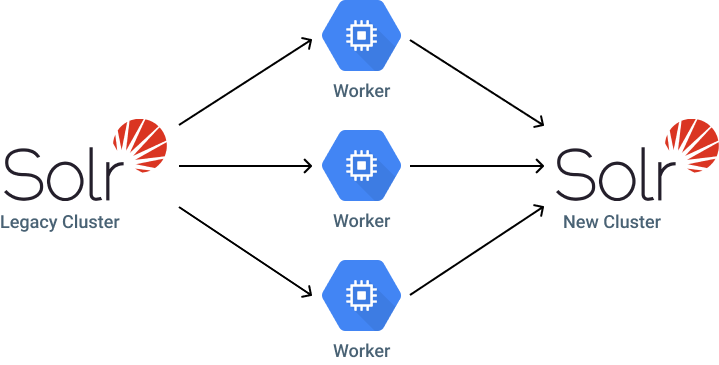

Data migration workers, parallelized, transferring historical data from the legacy to new cluster

Our data is naturally separated by customer and is amenable to parallel workers targeting each customer independently. Unfortunately, in practice, a single worker per customer would still take months to complete given the uneven distribution of data across customers.

To support useful parallelization, we time slice customer data based on length of retention periods and volume of data, assigning workers to process each time slice. As a result of this parallelization, the workers also require additional coordination to keep the process running smoothly.

Coordination

With all of the workers running in parallel and migrating to and from the same Solr clusters, distributed coordination is paramount to ensuring workers aren’t stepping on each other’s toes or repeating completed work.

Coordination is handled through ZooKeeper. At a high level, we have a structure of ephemeral claim nodes and persistent work nodes.

A worker must create and hold an ephemeral claim node before it can act on any work slice. Making a claim for work is the first thing a worker does. It will continue to make claims as work is completed or skipped until it runs out of available work. Of note, the ephemeral property is important: if a process exits non-gracefully, any claims it held are freed up automatically.

The work nodes contain the progress data. This data includes both the records processed and the latest cursor that should be used when picking the work back up upon process restart (to avoid repeating work).
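The claim and work node structure can be sketched as follows. This is an in-memory simulation under stated assumptions, not our implementation and not a ZooKeeper client: `Coordinator`, `WorkNode`, `try_claim`, `save_progress`, and `expire_session` are hypothetical names. With a real ZooKeeper ensemble, a claim would be an ephemeral znode tied to the worker's session and the work node would be a persistent znode; here, `expire_session` imitates what happens when a crashed worker's session ends.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class WorkNode:
    """Persistent progress for one work slice (survives worker restarts)."""
    records_processed: int = 0
    cursor: Optional[str] = None
    done: bool = False


@dataclass
class Coordinator:
    """In-memory stand-in for the coordination service (hypothetical API)."""
    work: Dict[str, WorkNode] = field(default_factory=dict)
    claims: Dict[str, str] = field(default_factory=dict)  # slice id -> worker session

    def try_claim(self, session: str) -> Optional[str]:
        """Claim the first unfinished, unclaimed slice; None when nothing is available."""
        for slice_id in sorted(self.work):
            if not self.work[slice_id].done and slice_id not in self.claims:
                self.claims[slice_id] = session
                return slice_id
        return None

    def save_progress(self, session: str, slice_id: str, processed: int,
                      cursor: str, done: bool = False) -> None:
        """Record progress on a slice; only the current claim holder may write."""
        if self.claims.get(slice_id) != session:
            raise PermissionError(f"{session} does not hold the claim on {slice_id}")
        node = self.work[slice_id]
        node.records_processed += processed
        node.cursor = cursor
        node.done = done
        if done:
            del self.claims[slice_id]  # release the claim once the slice is finished

    def expire_session(self, session: str) -> None:
        """Simulate a crashed worker: all of its ephemeral claims disappear."""
        self.claims = {s: owner for s, owner in self.claims.items() if owner != session}
```

If a worker saves progress on a slice and then crashes, the next worker to call `try_claim` receives the same slice and finds the saved cursor in its work node, so it can resume instead of starting over.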

Time Slicing

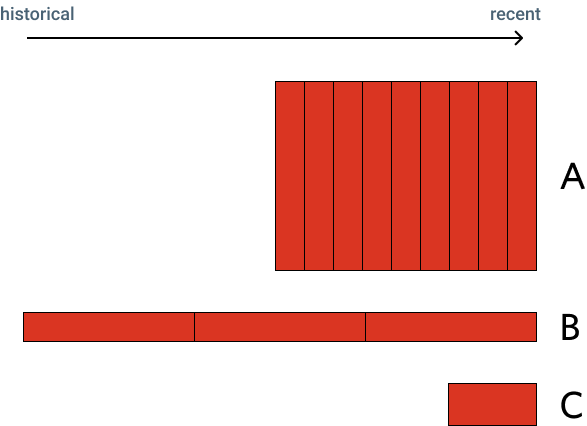

We have customers of all shapes and sizes with different retention periods and volumes of data. One customer might record the same amount of data in a week as another customer records in an entire three-month period. We must account for these differences when driving our migration workers.

The time slicing is fairly straightforward:

1. Determine the total number of records and retention period for a given customer’s data.

2. Slice that customer’s data into time intervals that would each produce an estimated N records, where N is the number of records that we believe a single worker can process in a reasonable time (e.g. an hour).

A migration worker will address a single time slice of a single customer at a time. In the accompanying visual, customer A has a high volume dataset with average retention, customer B has a low volume dataset with long retention, and customer C has an average volume dataset with short retention. As shown, each dataset gets sliced up using different time intervals so that each migration work slice is close to the same level of effort for the migration workers.

Example work slicing for different retention periods and volumes of data.
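The slicing rule described in this section can be sketched as below. It is a simplified illustration, not our actual slicer: the function name `slice_retention` and its parameters are hypothetical, and it assumes records are spread evenly across the retention period, which real customer data will not always satisfy.

```python
import math
from datetime import datetime, timedelta
from typing import List, Tuple


def slice_retention(start: datetime, end: datetime, total_records: int,
                    target_per_slice: int) -> List[Tuple[datetime, datetime]]:
    """Split [start, end) into equal intervals of roughly `target_per_slice` records.

    Assumes records are uniformly distributed over time (a simplification).
    """
    if end <= start:
        raise ValueError("end must be after start")
    if target_per_slice <= 0:
        raise ValueError("target_per_slice must be positive")
    # At least one slice, even for customers with very little data.
    num_slices = max(1, math.ceil(total_records / target_per_slice))
    width: timedelta = (end - start) / num_slices
    bounds = [start + i * width for i in range(num_slices)] + [end]
    return list(zip(bounds[:-1], bounds[1:]))
```

Under these assumptions, a customer with 3,000,000 records over 30 days and a target of 1,000,000 records per slice gets three 10-day slices, while a customer with only a handful of records over the same period gets a single slice covering the whole retention period.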

Results

Through parallelization, coordination, and time slicing, and even with fairly conservative settings, Fullstory can safely and efficiently migrate our entire analytics dataset to brand new database clusters whenever we need to.